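In this story we will see how to build an HR chatbot using LangChain and Chainlit. The chatbot answers questions about employee-related policies, e.g. maternity leave, hazard reporting, training policy, the code of conduct and more.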

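Since this project was created as a POC, we decided to experiment a bit by hijacking the prompts used in this tool, so that the chatbot occasionally tells some jokes while answering questions. So this chatbot should have a sense of humour and some sort of "personality".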


About Chainlit

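Chainlit is an open-source Python / TypeScript library that allows developers to quickly create ChatGPT-like user interfaces. It allows you to create a chain of thought and then add a pre-built, configurable chat user interface to it. It is excellent for web-based chatbots.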

Chainlit is much better suited for this task than Streamlit, which requires more work to configure the UI components.

The code for the Chainlit library can be found here:

Chainlit - Build Python LLM apps in minutes ⚡️

Chainlit has two main components:

- back-end: it is Python-based and lets you interact with libraries like LangChain, Llama Index and LangFlow.

- front-end: it is a TypeScript-based React application that uses Material UI components.

A Quick Tour of Our HR Chatbot

Our chatbot has a UI which initially looks like this in light mode:

Initial Chatbot UI

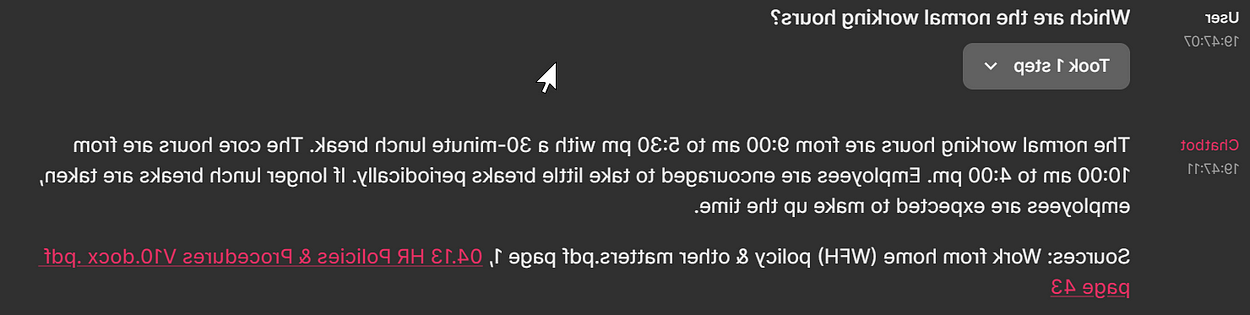

You can then type your question, and the result is displayed as shown below (using dark mode):

Question on normal working hours

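The UI shows not only the question and the answer, but also the source files. If the text was found, the PDF files are also clickable and you can view their contents.

If you expand the steps of the LangChain chain, this is what you see: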

Thought chain representation

The user interface (UI) also has a searchable history:

Searchable history

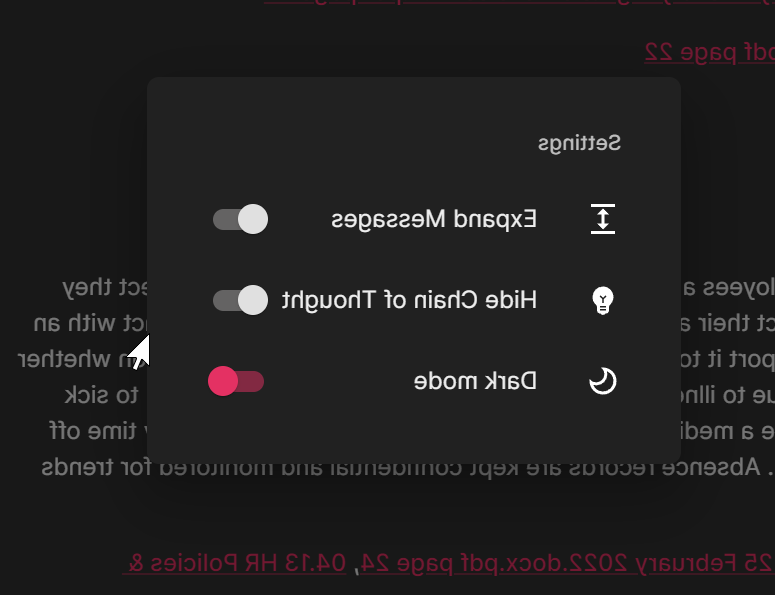

You can easily switch between light and dark mode too:

Open settings

Dark mode switch

An HR Chatbot That Can Tell Jokes

We have manipulated the chatbot to tell jokes, especially when it cannot answer a question based on the existing knowledge base. So you might see responses like this one:

Joke when no source found

Chain Workflow

There are two workflows in this application:

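- setup workflow: used to set up the vector database (FAISS in this case) that represents a collection of text documents

- user interface workflow: the chain-of-thought interactions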

Setup Workflow

You can visualise the setup using the following BPMN diagram:

Setup workflow

These are the setup workflow steps:

- The code first lists all PDF documents in a folder.

- The text of each page of the documents is extracted.

- The text is sent to the OpenAI embeddings API.

- A collection of embeddings is retrieved.

- The result is accumulated in memory.

- The accumulated collection of embeddings is persisted to disk.
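The setup steps above can be sketched roughly as follows. This is a minimal illustration and not the project's actual code: `build_index`, `extract_pages` and `embed` are hypothetical names, the PDF extractor and the embeddings call are passed in as plain callables, and JSON is used for persistence where the real project persists a FAISS store.

```python
import json
from pathlib import Path
from typing import Callable, Dict, List

# Hypothetical callable types: in the real project these roles are played by
# a PDF text extractor and the OpenAI embeddings API.
PageExtractor = Callable[[Path], List[str]]
Embedder = Callable[[List[str]], List[List[float]]]


def build_index(pdf_dir: Path, extract_pages: PageExtractor,
                embed: Embedder, out_file: Path) -> List[Dict]:
    """List PDFs, extract page text, embed it, accumulate, then persist."""
    records: List[Dict] = []
    for pdf_path in sorted(pdf_dir.glob("*.pdf")):   # list the PDF documents
        pages = extract_pages(pdf_path)              # text of each page
        if not pages:
            continue
        vectors = embed(pages)                       # send text, get embeddings
        for page_no, (text, vector) in enumerate(zip(pages, vectors), start=1):
            records.append({                         # accumulate in memory
                "source": pdf_path.name,
                "page": page_no,
                "text": text,
                "embedding": vector,
            })
    # Persist the accumulated records (JSON here is an assumption of this sketch).
    out_file.write_text(json.dumps(records), encoding="utf-8")
    return records
```

Passing the extractor and the embedder in as arguments keeps the sketch runnable without network access; a real setup would plug in a PDF library and an embeddings client at those two points.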

User interface workflow

This is the user interface workflow:

User interface workflow

Here are the user workflow steps:

- The user asks a question

- A similarity search query is executed against the vector database (in our case FAISS, Facebook AI Similarity Search).

- The vector database typically returns up to 4 documents.

- The returned documents are sent as context to ChatGPT (model: gpt-3.5-turbo-16k) together with the question.

- ChatGPT returns the answer

- The answer gets displayed on the UI.
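To make the retrieval step more concrete, here is a small self-contained sketch of it. It is only an illustration: a brute-force cosine similarity search stands in for FAISS, the vectors are toy values rather than real embeddings, and the names `top_k` and `build_llm_input` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float],
          docs: Sequence[Tuple[str, Sequence[float]]],
          k: int = 4) -> List[str]:
    """Return the texts of the k documents most similar to the query."""
    # Brute-force ranking stands in for the FAISS similarity search here.
    ranked = sorted(docs, key=lambda d: cosine_similarity(query_vec, d[1]),
                    reverse=True)
    return [text for text, _ in ranked[:k]]


def build_llm_input(question: str, context_docs: Sequence[str]) -> str:
    """Combine the retrieved documents and the question into one prompt body."""
    context = "\n\n".join(context_docs)
    return f"{context}\n\nQUESTION: {question}"
```

The default of `k=4` mirrors the "up to 4 documents" mentioned above; with fewer documents in the store, fewer are returned.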

Chatbot Code Installation and Walkthrough

The entire chatbot code can be found in this GitHub repository:

http://github.com/onepointconsulting/hr-chatbot

Chatbot Code Walkthrough

The configuration of most parameters of the application is in this file:

http://github.com/onepointconsulting/hr-chatbot/blob/main/config.py

In this file we set the FAISS persistence directory, the type of embeddings ("text-embedding-ada-002", the default option) and the model ("gpt-3.5-turbo-16k").
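A configuration module of this kind could look roughly like the sketch below. The class name, field names and environment variable names (`FAISS_STORE`, `EMBEDDING_MODEL`, `CHAT_MODEL`) are assumptions made for illustration; only the two default model names come from the text above, and the repository's config.py may be organised differently.

```python
import os
from dataclasses import dataclass
from pathlib import Path
from typing import Mapping, Optional


@dataclass(frozen=True)
class Config:
    faiss_persist_dir: Path   # where the vector store is persisted
    embedding_model: str      # type of embeddings
    chat_model: str           # chat model used to answer questions


def load_config(env: Optional[Mapping[str, str]] = None) -> Config:
    """Read settings from the environment, falling back to defaults."""
    env = os.environ if env is None else env
    return Config(
        # The variable names below are hypothetical.
        faiss_persist_dir=Path(env.get("FAISS_STORE", "./faiss_store")),
        embedding_model=env.get("EMBEDDING_MODEL", "text-embedding-ada-002"),
        chat_model=env.get("CHAT_MODEL", "gpt-3.5-turbo-16k"),
    )
```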

The text is extracted and the embeddings are processed in this file:

http://github.com/onepointconsulting/hr-chatbot/blob/main/generate_embeddings.py

The function which extracts the PDF text per page (load_pdfs), together with the source file and page metadata, can be found via this link:

http://github.com/onepointconsulting/hr-chatbot/blob/main/generate_embeddings.py#L22

And the function which generates the embeddings (generate_embeddings) can be found in line 57:

http://github.com/onepointconsulting/hr-chatbot/blob/main/generate_embeddings.py#L57

The file which then initialises the vector store and creates a LangChain question-answering chain is this one:

http://github.com/onepointconsulting/hr-chatbot/blob/main/chain_factory.py

The function load_embeddings is one of the most important functions in chain_factory.py.

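This function loads the PDF documents to support text extraction in the Chainlit UI. If there are no persisted embeddings, the embeddings are generated. If the embeddings are persisted, they are loaded from the file system.

This strategy avoids calling the embeddings API too often, which saves money.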
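The load-or-generate strategy can be sketched in a few lines. This is a simplified stand-in, not the body of load_embeddings: the function name `load_or_generate` is hypothetical, `generate` represents the paid embeddings call, and a JSON file takes the place of the persisted FAISS store.

```python
import json
from pathlib import Path
from typing import Callable, List


def load_or_generate(store_file: Path,
                     generate: Callable[[], List[List[float]]]) -> List[List[float]]:
    """Load persisted embeddings if present, otherwise generate and persist them."""
    if store_file.exists():
        # Persisted: read from the file system, no API call needed.
        return json.loads(store_file.read_text(encoding="utf-8"))
    embeddings = generate()   # the expensive call happens only on a cache miss
    store_file.parent.mkdir(parents=True, exist_ok=True)
    store_file.write_text(json.dumps(embeddings), encoding="utf-8")
    return embeddings
```

Calling the function twice with the same path triggers `generate` only once; deleting the file forces the embeddings to be regenerated, for instance after the PDF collection changes.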

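And the other important function in chain_factory.py is create_retrieval_chain, which loads the QA (question answering) chain.

This function creates the QA chain with memory. If the humour parameter is true, a manipulated prompt is used, one which tends to produce jokes on certain occasions.

We had to subclass the ConversationSummaryBufferMemory memory class so that the memory does not throw an error related to a key not being found.

Here is an extract of the prompt text that we use in the QA chain: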

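Given the following extracted parts of a long document and a question, create a final answer with references ("SOURCES"). If you know a joke about the subject, make sure that you include it in the response.

If you don't know the answer, say that you don't know and make up some joke about the subject. Don't try to make up an answer.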

ALWAYS return a “SOURCES” part in your answer.
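Switching between a plain and a humorous prompt can be as simple as choosing between two instruction strings. The sketch below uses the prompt excerpt above for the humorous variant; the plain variant, the template layout and the placeholder names (`question`, `summaries`) are assumptions made for this illustration, and `render_prompt` is a hypothetical helper rather than a function from the repository.

```python
HUMOUR_INSTRUCTIONS = (
    "Given the following extracted parts of a long document and a question, "
    'create a final answer with references ("SOURCES"). '
    "If you know a joke about the subject, make sure that you include it "
    "in the response.\n"
    "If you don't know the answer, say that you don't know and make up some "
    "joke about the subject. Don't try to make up an answer.\n"
    'ALWAYS return a "SOURCES" part in your answer.'
)

# Assumption: the non-humorous variant simply drops the two joke instructions.
PLAIN_INSTRUCTIONS = (
    "Given the following extracted parts of a long document and a question, "
    'create a final answer with references ("SOURCES").\n'
    "If you don't know the answer, say that you don't know. "
    "Don't try to make up an answer.\n"
    'ALWAYS return a "SOURCES" part in your answer.'
)

# Assumption: the layout and placeholder names are illustrative.
TEMPLATE = (
    "{instructions}\n\n"
    "QUESTION: {question}\n"
    "=========\n"
    "{summaries}\n"
    "=========\n"
    "FINAL ANSWER:"
)


def render_prompt(question: str, summaries: str, humour: bool) -> str:
    """Fill the template with the instructions chosen by the humour flag."""
    instructions = HUMOUR_INSTRUCTIONS if humour else PLAIN_INSTRUCTIONS
    return TEMPLATE.format(instructions=instructions,
                           question=question, summaries=summaries)
```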

The part of the code that is actually related to Chainlit is in this file:

http://github.com/onepointconsulting/hr-chatbot/blob/main/hr_chatbot_chainlit.py

The Chainlit library works with Python decorators. The initialisation of the LangChain QA chain is done inside a decorated function which you can find here:

http://github.com/onepointconsulting/hr-chatbot/blob/main/hr_chatbot_chainlit.py#L56

This function loads the vector store using load_embeddings(). The QA chain is then initialised by the function create_retrieval_chain and returned.

The last function process_response converts the LangChain result dictionary to a Chainlit Message object. Most of the code in this method tries to extract the sources, which sometimes appear in unexpected formats in the text. Here is the code:

http://github.com/onepointconsulting/hr-chatbot/blob/main/hr_chatbot_chainlit.py#L127
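To give an idea of what tolerant source extraction can look like, here is a small regex-based sketch. It is not the code of process_response: the function name `split_answer_and_sources` is hypothetical, and the sketch only handles a "SOURCES:" or "Source:" marker followed by a comma-separated, semicolon-separated or bulleted list. A marker without a colon is not recognised.

```python
import re
from typing import List, Tuple

# "SOURCES:" or "SOURCE:", in any letter case, with optional spaces before the colon.
_SOURCES_MARKER = re.compile(r"\bsources?\s*:", re.IGNORECASE)


def split_answer_and_sources(reply: str) -> Tuple[str, List[str]]:
    """Split an LLM reply into the answer text and a list of source names."""
    matches = list(_SOURCES_MARKER.finditer(reply))
    if not matches:
        return reply.strip(), []          # the model ignored the instruction
    last = matches[-1]                    # use the last marker in the reply
    answer = reply[:last.start()].strip()
    raw_sources = reply[last.end():]
    # Sources may be separated by commas, semicolons or newlines,
    # and may carry bullet characters in front.
    parts = [p.strip(" \t-*") for p in re.split(r"[,\n;]+", raw_sources)]
    return answer, [p for p in parts if p]
```

Returning an empty list when no marker is found lets the UI fall back to showing the answer without clickable documents.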

Key Takeaways

Chainlit is part of the growing LangChain ecosystem, and it lets you quickly build good-looking web-based chat applications. It has some customization options, e.g. it lets you quickly integrate with authentication platforms or persist data.

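However, we found it a bit difficult to remove the "Built with Chainlit" footnote and ended up doing it in a rather "hacky" way, which is probably not very clean. It is currently not clear how deep UI customization can be done without creating a fork or using dirty hacks.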

Another problem that we faced was how to reliably interpret the LLM output — especially how to extract the sources from the reply. In spite of the prompt telling the LLM to:

create a final answer with references (“SOURCES”)

It does not do that at all times, and this causes some unreliable source extraction. This problem can, however, be addressed by OpenAI's Function Calling functionality, with which you can specify the output format, but which was not yet available at the time of writing the code.
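As a rough idea of how a structured output format could replace the free-text "SOURCES" section, the sketch below describes a function in the JSON Schema style used by function calling and validates the arguments a model would send back. No API call is made: the function name `answer_with_sources`, its fields and the sample arguments string are all made up for illustration.

```python
import json
from typing import Dict

# Hypothetical function description in JSON Schema style.
ANSWER_FUNCTION = {
    "name": "answer_with_sources",
    "description": "Return the answer and the documents it was based on.",
    "parameters": {
        "type": "object",
        "properties": {
            "answer": {"type": "string"},
            "sources": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["answer", "sources"],
    },
}


def parse_function_arguments(arguments_json: str) -> Dict:
    """Parse and minimally validate the JSON arguments of a function call."""
    data = json.loads(arguments_json)
    required = ANSWER_FUNCTION["parameters"]["required"]
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"Missing fields: {missing}")
    return {
        "answer": str(data["answer"]),
        "sources": [str(s) for s in data["sources"]],
    }


# Hand-written sample, not real model output.
sample = '{"answer": "Normal hours are 9 to 5.", "sources": ["handbook.pdf"]}'
parsed = parse_function_arguments(sample)
```

With this approach the sources arrive as a list field, so no text parsing of the reply is needed.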

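On the plus side, LLMs allow you to create chatbots with a flavour, if you are willing to change the prompts in creative ways. The jokes told by the HR assistant are not that great; however, they prove that you can create "flavoured" AI assistants, which eventually can be more engaging and fun for end users.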